What Chatbot Bloopers Reveal About the Future of AI

What a difference seven days makes in the world of generative AI.

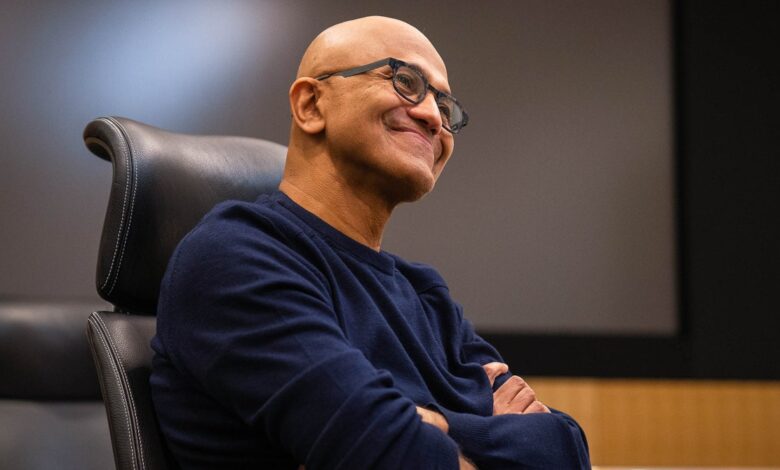

Last week Satya Nadella, CEO of Microsoft, gleefully told the world that the new AI-infused Bing search engine would “make Google dance” by challenging its longstanding dominance in web search.

The new Bing uses something called ChatGPT (you may have heard of it), which represents a significant leap in the ability of computers to process language. Thanks to advances in machine learning, it has essentially figured out for itself how to answer all sorts of questions by gobbling up trillions of lines of text, much of it pulled from the web.

Google did, in fact, dance to Satya’s tune by announcing Bard, its answer to ChatGPT, and promising to use the technology in its own search results. Baidu, China’s largest search engine, said it is working on similar technology.

But Nadella might want to watch where his company is heading.

In the demos that Microsoft gave last week, Bing seemed able to use ChatGPT to give complex and comprehensive answers to queries. It came up with an itinerary for a trip to Mexico City, generated financial summaries, made product recommendations that collated information from multiple reviews, and offered advice on whether a piece of furniture would fit into a minivan by comparing dimensions posted online.

WIRED had some time during the launch to put Bing to the test. And as one keen-eyed expert noticed, some of the results that Microsoft showed off were less impressive than they first appeared. Bing seems to have fabricated some information in the travel itinerary it generated, and it left out some details that no person would be likely to omit. The search engine also mixed up Gap’s financial results by confusing gross margin with unadjusted gross margin, a serious mistake for anyone relying on the bot to perform what seems like the simple task of summarizing the numbers.

More problems surfaced this week, as the new Bing was made available to more beta testers. They appear to include arguing with a user about what year it is and going through an existential crisis when pushed to prove its own sentience. Google’s market capitalization dropped by a staggering $100 billion after someone noticed an error in a Bard-generated answer in the company’s demo video.

Why do these tech giants make such silly mistakes? It has to do with the strange way that ChatGPT and similar AI models actually work — and the extraordinary hype of the moment.

What is confusing and misleading about ChatGPT and similar models is that they answer questions by making highly educated guesses. ChatGPT generates what it thinks should follow your question based on statistical representations of characters, words, and paragraphs. The startup behind the chatbot, OpenAI, has honed that core mechanism to deliver more satisfying answers by having humans give positive feedback whenever the model generates responses that seem correct.
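The guessing described above can be illustrated with a deliberately tiny sketch. It is not how ChatGPT is built (that system uses a large neural network, and the human-feedback step is not modeled here); it is a toy word-pair counter, with a made-up corpus and hypothetical function names, that picks whichever word most often followed the previous one.

```python
from collections import Counter, defaultdict

# Made-up training text, purely for illustration.
CORPUS = (
    "the cat sat on the mat . "
    "the cat ate the fish . "
    "the dog sat on the rug ."
)


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def continue_text(counts, start, length=4):
    """Greedily extend `start` by up to `length` guessed words."""
    words = [start]
    for _ in range(length):
        guess = most_likely_next(counts, words[-1])
        if guess is None:
            break
        words.append(guess)
    return " ".join(words)


if __name__ == "__main__":
    counts = build_bigram_counts(CORPUS)
    print(continue_text(counts, "dog"))  # prints "dog sat on the cat"
```

The output reads fluently, yet “dog sat on the cat” appears nowhere in the training text. The toy model has no notion of whether a statement is true; it only knows which words tend to follow which.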

ChatGPT can be impressive and entertaining, because that process can create the illusion of understanding, which can work well in some use cases. But the same process will “hallucinate” untrue information, a problem that may be one of the most important challenges in technology right now.

The hype and intense expectations surrounding ChatGPT and similar bots increase the risk. When well-funded startups, some of the world’s most valuable companies, and the most prominent leaders in the tech sector all say chatbots are the next big thing in search, many people will take it as gospel, spurring those who started the chatter to double down with more predictions of AI omniscience. It’s not just chatbots that can be led astray by pattern matching without fact checking.