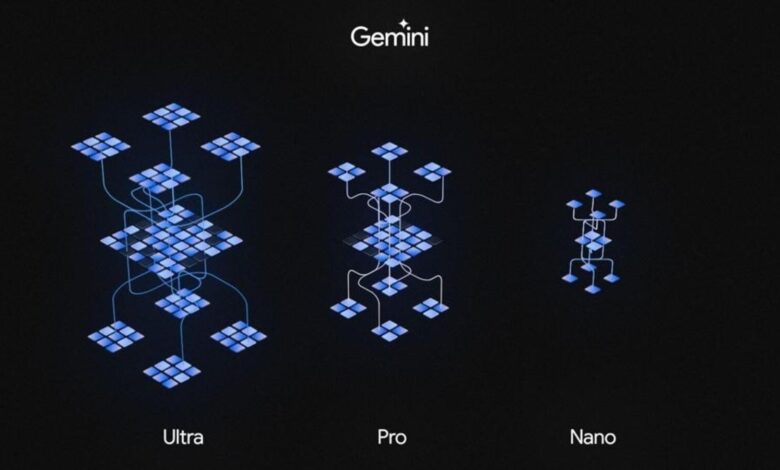

Google admits Gemini AI hands-on demo video was not real and edited to “inspire developers”

Alongside unveiling its latest foundation model, Gemini, and making big claims about its capabilities, Google released a demo video on YouTube in which a user performs various activities on a table while the AI appears to recognize them instantly and respond verbally. The video was met with awe because no one in the industry has achieved such results from an AI model so far, and likely nobody will for a long time, including Google. It turns out the video was not entirely real: Google edited the Gemini AI demo and added elements to make the model seem more advanced than it really is.

Questions about the demo video were first raised in a Bloomberg op-ed by Parmy Olson, who claimed that Google was misrepresenting the capabilities of its Gemini AI in the demo. Later, The Verge reached out to a Google spokesperson, who pointed to a post on X by Oriol Vinyals, the vice president of Research & Deep Learning Lead at Google DeepMind and a co-lead of the Gemini project.

In the post, he said, “All the user prompts and outputs in the video are real, shortened for brevity. The video illustrates what the multimodal user experiences built with Gemini could look like. We made it to inspire developers”.

Google admits to editing its Gemini AI demo video

Bloomberg learned more about exactly how the demo was made from another Google spokesperson, who revealed that the results in the video were achieved by “using still image frames from the footage, and prompting via text”.

These revelations are quite concerning. Editing a video to remove snags and make slight enhancements is common practice. Adding elements that make a product appear to have new core competencies, however, can be considered misleading. On top of that, it becomes obvious that, apart from certain parts, the demo is not that impressive.

Another blow came from Ethan Mollick, a Wharton professor, who showed in posts on X that ChatGPT Plus was capable of giving similar results when presented with an image-based prompt. He said, “The video part is quite cool, but I have difficulty believing that there is no prompting happening here behind the scenes. GPT-4 class AIs are good at interpreting intent, but not with as much shifting context. GPT-4 doesn’t do video, but seems to give similar answers to Gemini”.

Many have reacted to Vinyals’s post on “brevity” and expressed their disappointment at realizing that the video was not real. One X user replied, “If you want to inspire developers then why don’t you post factual content? The prompts can’t be ‘real’ and shortened at the same time. It was disingenuous and misleading”.