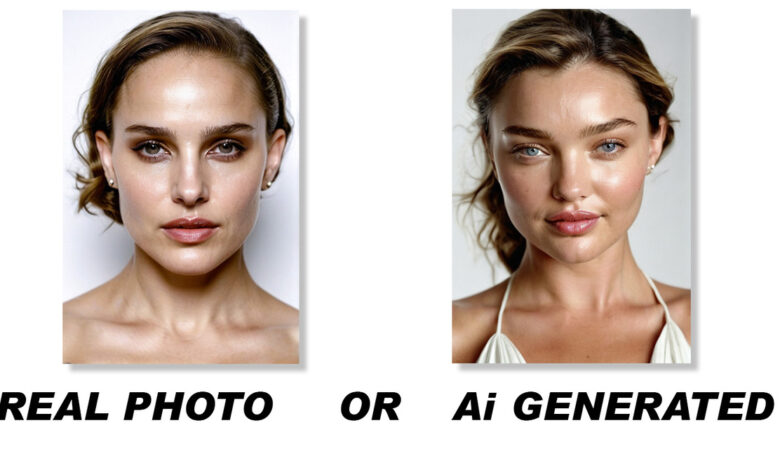

People Can’t Identify Fake AI Faces From Real Headshots

AI art-generating programs are getting progressively better at creating hyper-realistic images. For the last few months, I have been using AI to create headshot images of completely fake people with the lighting style of a few famous photographers. In this social experiment, I wanted to see if the average person could tell the difference between images of real people photographed by real photographers versus imaginary people created entirely with artificial intelligence. Take my quiz and view the results in what I call “Real vs AI Headshots.”

Every day, more and more high-profile whistleblowers share their dire concerns about how artificial intelligence is going to shape the future for humanity. We’ve all seen the interviews with people like Elon Musk, Demis Hassabis, Geoffrey Hinton, Sam Harris, and Mo Gawdat, who consistently warn that AI-based programs like OpenAI’s ChatGPT, Google’s DeepMind, and IBM’s Watson are only the tip of the iceberg in a race pitting computer science against human development. While these programs are no doubt leaders in the AI space, as a photographer, I’m much more interested in what artificial intelligence is doing to the creative world, specifically photography and videography.

Like many other photographers, I first became aware of AI art-generating programs back in the summer of 2022. Programs like Midjourney and DALL·E 2 were pretty impressive, but the results were still often cartoonish and overly mystical, and they lacked the realism to seem like a real threat to the world of traditional photography. Over the last 12 months, these programs have gotten better and better at producing photorealistic images with both text-only prompts and image-to-image renderings. It is actually pretty comical to go back and rewatch any YouTube content from 2022 predicting where AI generation would be in a year and see where we have actually wound up. Comical might be too benign a word to use; perhaps naïve or even shortsighted might be a better description of those predictions, given how fast this technology wound up progressing in just 12 short months.

So, how far along has AI-generated imagery come, and where does it stand today at the end of 2023? This was the question I sought to answer when I came up with my latest social experiment. Instead of asking Fstoppers readers, who are often full-time photographers, I wanted to see how my realistic but completely fake AI images of people were received by average, everyday people who aren’t making a living with photography. Would they immediately recognize the soullessness behind my creations, or would they be fooled into believing they were in fact real people captured by real photographers?

Take The Test For Yourself

Before explaining how these images were created or any of the specific results, if you want to take a digital version of this test, you can click the image below. The 44-image test consists of a mixture of real photographs taken by real photographers and AI images I created using the image-generating program Stable Diffusion. I’ve set a time limit of eight seconds per image to simulate the average glance most people would give any given photograph. Be aware though, as soon as you click the quiz button, the first image will appear and the timer will start.

How the Test Was Created

I never intended to create any sort of test pitting real photography against AI-created art simply because, at the time, I didn’t think AI-generated images were good enough to fool anyone. It wasn’t until I switched over from Midjourney to Stable Diffusion that I started to realize just how far along this technology had come. For me, Midjourney’s images were simply way too stylized for anyone to find them real. The colors were too vibrant, people’s skin was way too fake, faces were far too perfect with few imperfections, and overall, they looked more like something out of the movie Avatar than a print from a Richard Avedon medium format negative. Stable Diffusion changed all of that, though.

I’m not the most tech-savvy photographer, so installing everything needed to get Stable Diffusion running was pretty challenging. Unlike Midjourney or OpenAI’s DALL·E software, which basically work the same for everyone, Stable Diffusion is based around open source programs and requires you to become familiar with software jargon like Python, GitHub, and special AI-training plugins called LoRAs, style models, and variational autoencoders (VAE). If that’s not complicated enough, you can also install different graphical user interfaces to help make your workflow more visual instead of code-based. I wound up installing one called ComfyUI and primarily used a custom node or workspace called SeargeSDXL. I only mention this in the event you are as curious about Stable Diffusion as I was, because it’s honestly not as intuitive and user-friendly as the aforementioned Midjourney and DALL·E AI-generating programs.

I spent the better part of a month tweaking and testing my workflow, but eventually, I was able to produce some truly spectacular AI-created headshots. All of my images were based around the photographic styles of two of the most famous headshot photographers, Peter Hurley and Martin Schoeller. Although I used word prompts only, because these two photographers have such a massive body of work online, the AI software was able to emulate their styles pretty easily. In order to get realistic renderings of human eyes, hair, and skin, I often had to employ different LoRA models, upscalers, and refiner models. This took a ton of time, and honestly, even after I perfected a handful of images, I found myself constantly tweaking all these settings over and over again.

My goal was to generate over 100 usable AI-created headshots. Initially, I relied on LoRAs that other Stable Diffusion users had created that were trained to perfectly create celebrities, models, actors, and even porn stars (because this space is very heavily used to create NSFW imagery). After generating a handful of images that looked exactly like well-known people, I started scaling back those AI-trained models to just insert maybe 20% of someone’s likeness in hopes of creating more generic-looking people. For my final test, I included a few of the celebrity doppelgangers because Peter Hurley and Martin Schoeller are both known for working with the real people in their own work.
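For readers curious what "scaling back" a LoRA means numerically, here is a minimal sketch, assuming the common low-rank update form in which a LoRA contributes a small correction to a layer's weights and a strength value multiplies that correction. The function names (`matmul`, `apply_lora`) and the tiny matrices are hypothetical and purely illustrative; this is not the code Stable Diffusion or any interface actually runs.

```python
def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [
        [sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_lora(base, up, down, strength):
    """Return base + strength * (up @ down).

    base:     the original layer weights (out x in)
    up, down: the two small low-rank matrices (out x r) and (r x in)
    strength: how much of the LoRA's effect to mix in (0.2 is roughly "20%")
    """
    delta = matmul(up, down)
    return [
        [w + strength * d for w, d in zip(w_row, d_row)]
        for w_row, d_row in zip(base, delta)
    ]


if __name__ == "__main__":
    # Toy 2x2 layer with a rank-1 LoRA; all values are made up for illustration.
    base = [[1.0, 0.0], [0.0, 1.0]]
    up = [[1.0], [2.0]]
    down = [[3.0, 4.0]]
    print(apply_lora(base, up, down, 1.0))  # full-strength likeness
    print(apply_lora(base, up, down, 0.2))  # scaled back to about 20%
```

At a strength of 0 the base weights come back unchanged, which matches the intuition that dialing a likeness LoRA down pulls the result back toward the generic model.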

For the real photographs, I simply mixed in a wide variety of actual photographs taken by real photographers. A few images come from Peter himself, and others come from his headshot teaching platform The Headshot Crew. The goal was to evenly mix real images with AI-generated images and keep the styling consistent with Peter and Martin’s work only. Because both of these photographers are known for very stylized headshots, I wanted everyone who took my test to be limited to only seeing these types of headshots. In order to make sure every participant was fully aware of the photo aesthetic they were about to be tested on, I briefed each person on Peter and Martin’s work first by letting them explore each of their websites.

The Medium

The easy way to run this test would be to simply create an online test or quiz and let each participant quickly click through a bunch of images and see the results. I’ve created that test, linked above, which you can run for yourself, but I wanted to make this experience more hands-on. In order to make this a more intimate and visceral experience, I wound up printing all of these headshots through the photo-printing website Saal Digital. I’ve used Saal Digital in the past to create portfolio albums, posters, and photo art for my walls, so I knew the quality of their prints would give this test the immersive experience I wanted instead of simply handing someone a mouse or iPad and having them click through a screen.

Each image was printed as a 4×6 photograph on museum-grade FujiFilm Crystal Archive DP II Matte Paper. This paper not only brought all the colors and details of each image to life, but the matte paper also prevented fingerprints from ruining the experience for the next viewer. Even if only subconsciously, I think that seeing all of these images in a real, tangible print also made each participant feel more connected to the work. They became more real and valuable compared to just a JPEG on a computer screen.

I want to thank Saal Digital for sponsoring these prints for this test and being patient with me as I created this entire project. This took far longer than I ever could have imagined, but it was an experiment that I thought was extremely important to run. Whether you are a professional photographer or just someone who enjoys physical prints of your own family and memories, I’d encourage you to continue printing your own photographs in a real, physical format. If you want to see all the print options Saal Digital offers, you can check out their website here. At the time of writing, Saal Digital is offering Fstoppers readers a whopping 50% off all their products through this discount page.

The Results

Coming from a pre-medical background in college, I was super excited to run this photography-based experiment, and I was curious to see if the hyperrealistic AI images I had created were good enough to fool the average person. The main question I wanted to test was: “Are AI-created photos using only word prompts good enough to fool the average person into believing they are real people?” Notice that I’m not as interested in seeing if professional photographers can tell the difference but rather the normal, everyday person.

My test sample in this video was fairly small, with only about 20 people from my neighborhood. In the weeks before publishing this article, I opened up my online digital quiz to dozens more people, and the results are pretty consistent. On average, most people are scoring about 40-60% when shown 44 images. In my test group of six elementary and high school age kids, the average jumped up to around 50-74%, but I’d like to get more participants to see if this increased perceptiveness holds true or if it’s just an error based on a small sample size (and testing above-average children).
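To put those percentages in context, here is a rough sketch of how a score on the 44-image quiz compares with pure guessing. It assumes each image is an independent coin flip with a 50% chance of being right, which fits an even mix of real and AI images but is an assumption, not a measured property of the quiz. The function names are hypothetical and only for illustration.

```python
from math import comb


def score_percent(correct: int, total: int = 44) -> float:
    """Convert a raw count of correct answers into a percentage score."""
    return 100.0 * correct / total


def chance_of_at_least(correct: int, total: int = 44, p_guess: float = 0.5) -> float:
    """Probability of getting `correct` or more answers right by guessing alone.

    Uses the exact binomial tail. p_guess = 0.5 is an assumption that
    corresponds to an even real/AI mix and no skill at all.
    """
    return sum(
        comb(total, k) * p_guess**k * (1 - p_guess) ** (total - k)
        for k in range(correct, total + 1)
    )


if __name__ == "__main__":
    # 18, 22, 26, and 33 correct roughly span the score ranges quoted above.
    for correct in (18, 22, 26, 33):
        print(
            f"{correct}/44 = {score_percent(correct):.0f}% | "
            f"P(at least this by guessing) = {chance_of_at_least(correct):.4f}"
        )
```

Under that assumption, a score near the middle of the 40-60% range is very likely to happen by luck, while a score in the mid-70s would be hard to reach by guessing. A larger pool of test-takers is what would show whether any group is really doing better than chance.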

I’m excited to see and post more results as this online quiz circulates around the internet. I’m prepared for it to be skewed a bit because of the format change and perhaps the difference in how digital images are displayed versus printed photographs. Also, since this quiz is originating from a professional photography website, I’m sure the results will be skewed a bit based on the occupation of many of the test-takers. However, I’m hoping that in a few weeks’ time the number of people who participate in this quiz will be large enough to start building some strong opinions on how AI art-generating programs are affecting people’s perception of reality.

When I was a kid, I remember hearing that a photograph is worth a thousand words. But today, with AI being able to create anything you can imagine in a truth-defying manner, we are inching closer and closer to a photograph being worth whatever the human mind wants to believe it to be worth. If AI-generated art can now blur or outright change our ability to determine truth and honesty, we might find ourselves living in a very scary and apocalyptic world sooner than we thought.