AvatarCLIP: Zero-Shot Text-Driven Generation and Animation of 3D Avatars

Digital avatars are an important part of the film, game, and fashion industries. A recent paper published on arXiv.org proposes AvatarCLIP, which can generate and animate 3D avatars from natural language descriptions alone. It is the first fully text-driven avatar synthesis pipeline that covers the generation of shape, texture, and motion.

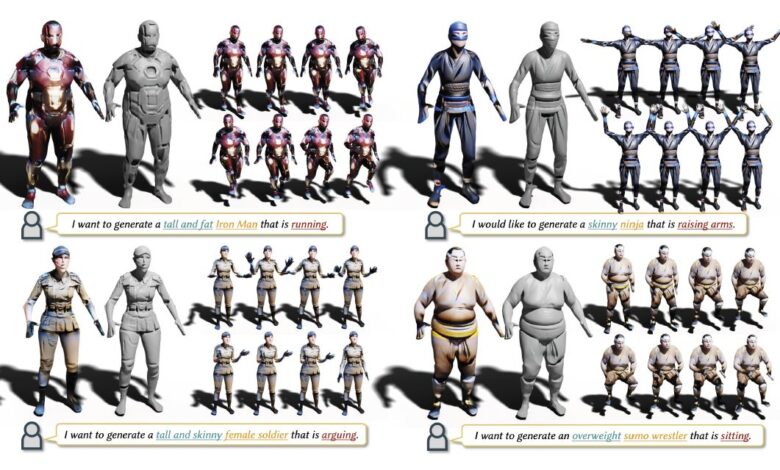

Driven by natural language descriptions of the desired shape, appearance, and motion of the avatar, AvatarCLIP is capable of robustly generating 3D avatar models with vivid textures, high-quality geometry, and reasonable motions. Image credit: arXiv:2205.08535 [cs.CV]

AvatarCLIP uses the power of large-scale pre-trained models to achieve strong zero-shot generation capability. It robustly generates animation-ready 3D avatars with high-quality textures and geometry. The researchers also propose a novel zero-shot, text-guided, reference-based motion synthesis approach.

Qualitative and quantitative experiments confirm that the generated avatars and motions are of higher quality than those produced by existing methods. Furthermore, the outputs are in good agreement with the corresponding natural language inputs.

3D avatar creation plays a crucial role in the digital age. However, the whole production process is prohibitively time-consuming and labor-intensive. To democratize this technology to a larger audience, we propose AvatarCLIP, a zero-shot text-driven framework for 3D avatar generation and animation. Unlike professional software that requires expert knowledge, AvatarCLIP empowers layman users to customize a 3D avatar with the desired shape and texture, and to drive the avatar with the described motions, using natural language alone. Our key insight is to take advantage of the powerful vision-language model CLIP to supervise neural human generation in terms of 3D geometry, texture, and animation. Specifically, driven by natural language descriptions, we initialize 3D human geometry generation with a shape VAE network. Based on the generated 3D human shapes, a volume rendering model is used to further facilitate geometry sculpting and texture generation. Moreover, by leveraging the priors learned in the motion VAE, a CLIP-guided reference-based motion synthesis method is proposed for the animation of the generated 3D avatar. Extensive qualitative and quantitative experiments validate the effectiveness and generalizability of AvatarCLIP on a wide range of avatars. Remarkably, AvatarCLIP can generate unseen 3D avatars with novel animations, achieving superior zero-shot capability.
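To give a rough intuition for what using CLIP as supervision can mean, the sketch below scores a few candidates against a text prompt by cosine similarity in a shared embedding space and picks the best match. It is only an illustration under stated assumptions, not the authors' implementation: the embeddings are hand-written toy vectors standing in for CLIP text and image features, and the helper names `cosine_similarity` and `pick_best_candidate` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pick_best_candidate(text_emb, candidate_embs):
    """Return (index, scores) for the candidate most similar to the text."""
    scores = [cosine_similarity(text_emb, c) for c in candidate_embs]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


# Toy stand-ins (assumption): in a real system these would be CLIP
# embeddings of the prompt and of rendered candidate avatars.
text_emb = [1.0, 0.0, 0.0]
candidate_embs = [
    [0.0, 1.0, 0.0],   # orthogonal to the prompt
    [2.0, 0.1, 0.0],   # nearly aligned with the prompt
    [-1.0, 0.0, 0.0],  # opposite direction
]

best, scores = pick_best_candidate(text_emb, candidate_embs)
print(best, [round(s, 3) for s in scores])
```

In an optimization setting the same similarity score would typically serve as a differentiable objective to maximize rather than as a selection rule; the selection form is used here only because it is the simplest to follow.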

Research article: Hong, F., Zhang, M., Pan, L., Cai, Z., Yang, L. and Liu, Z., “AvatarCLIP: Zero-Shot Text-Driven Generation and Animation of 3D Avatars”, 2022. Link: https://arxiv.org/abs/2205.08535

Project page: https://hongfz16.github.io/projects/AvatarCLIP.html