AMD reveals MI300X AI chip as ‘generative AI accelerator’

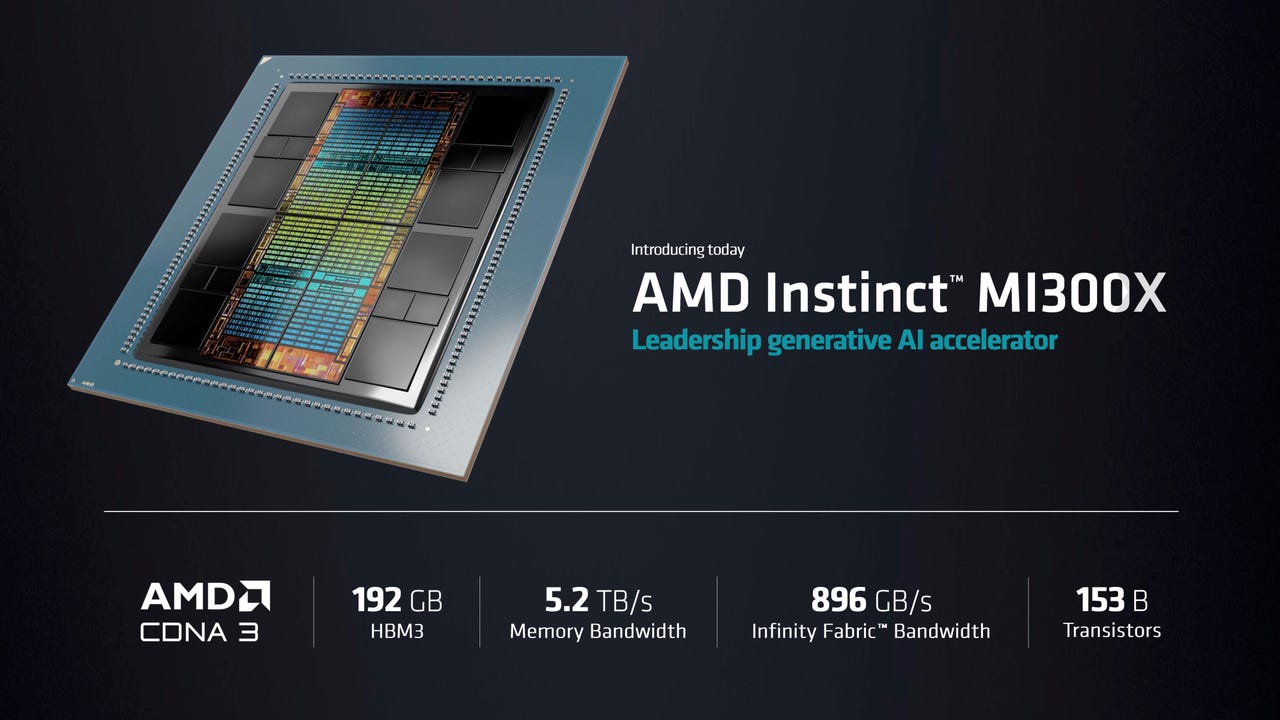

AMD’s Instinct MI300X GPU features multiple GPU “chiplets” along with 192 gigabytes of HBM3 DRAM memory and 5.2 terabytes per second of memory bandwidth. The company says it is the only chip that can handle large language models with up to 80 billion parameters in memory. AMD

Advanced Micro Devices CEO Lisa Su on Tuesday in San Francisco unveiled a chip that is central to the company’s strategy for artificial intelligence computing, boasting enormous memory and data throughput for so-called generative AI tasks such as large language models.

The Instinct MI300X, as the part is known, is the follow-up to the previously announced MI300A. The chip is actually a combination of many “chiplets,” individual chips that are bundled together in a single package using shared memory and network links.

Su, speaking on stage at an invite-only event at the Fairmont Hotel in downtown San Francisco, called the part a “generative AI accelerator” and said the GPU chiplets contained in it, a family known as CDNA 3, are “designed specifically for AI and HPC [high-performance computing] workloads.”

The MI300X is the “GPU only” version of the part. The MI300A is a combination of three Zen4 CPU chiplets with multiple GPU chiplets. But in the MI300X, the CPUs are swapped out for two additional CDNA 3 GPU chiplets.

The MI300X increases the transistor count from 146 billion to 153 billion, and the shared DRAM memory grows from 128 gigabytes in the MI300A to 192 gigabytes.

Memory bandwidth is increased from 800 gigabytes per second to 5.2 terabytes per second.

“Our use of chiplets in this product is very, very strategic because of the ability to mix and match different kinds of compute, swapping out CPU or GPU,” said Su.

Su says the MI300X will offer 2.4 times the memory density of Nvidia’s H100 “Hopper” GPU and 1.6 times the memory bandwidth.
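
As a rough check on those ratios, the sketch below compares the MI300X figures quoted in this article with H100 figures that are assumptions, not numbers from AMD’s presentation: 80 gigabytes of memory and 3.35 terabytes per second of bandwidth, as commonly listed for the SXM version of the H100.

```python
# MI300X figures as quoted in this article.
MI300X_MEMORY_GB = 192
MI300X_BANDWIDTH_TBPS = 5.2

# Assumed H100 (SXM) figures; these are not stated in AMD's presentation.
H100_MEMORY_GB = 80
H100_BANDWIDTH_TBPS = 3.35


def ratio(a: float, b: float) -> float:
    """Return a / b rounded to one decimal place."""
    return round(a / b, 1)


memory_ratio = ratio(MI300X_MEMORY_GB, H100_MEMORY_GB)
bandwidth_ratio = ratio(MI300X_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)

print(f"Memory: {memory_ratio}x, bandwidth: {bandwidth_ratio}x")
```

Under those assumed H100 numbers, the result lines up with the 2.4 and 1.6 multiples Su cited.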

“Generative AI, large language models have changed the game,” says Su. “The need for more computation is growing exponentially, whether you’re talking about training or about inference.”

To demonstrate the need for powerful computing, Su showed the part working on what she says is the most popular large language model at the moment, the open-source Falcon-40B. Language models require more computation as they are built with more and more of what are called neural network “parameters.” Falcon-40B consists of 40 billion parameters.

The MI300X is the first chip powerful enough to run a neural network of this size entirely in memory, rather than having to move data back and forth to and from external memory, she said.

Su demonstrated the MI300X composing a poem about San Francisco using Falcon-40B.

“A single MI300X can run models with up to about 80 billion parameters” in memory, she says.
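
A back-of-the-envelope sketch suggests where a figure like 80 billion parameters could come from. It assumes each parameter is stored in 16 bits (2 bytes), a precision AMD’s presentation did not specify, and it counts only the model weights; the helper `weights_gb` is a hypothetical name used for illustration.

```python
MI300X_MEMORY_GB = 192  # memory capacity quoted in this article


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Billions of parameters times bytes per parameter gives gigabytes.
    The 2-byte default is an assumption (16-bit weights).
    """
    return params_billions * bytes_per_param


for name, params in [("Falcon-40B", 40), ("80B-parameter model", 80)]:
    needed = weights_gb(params)
    headroom = MI300X_MEMORY_GB - needed
    print(f"{name}: ~{needed:.0f} GB of weights, {headroom:.0f} GB left over")
```

Under that assumption, an 80-billion-parameter model needs roughly 160 gigabytes for its weights, which leaves some of the 192 gigabytes free for the working data a model needs while running.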

“When you compare the MI300X with the competition, the MI300X offers 2.4 times the memory and 1.6 times the memory bandwidth, and with all that extra memory capacity, we actually have an advantage for large language models because we can run larger models directly in memory.”

To be able to run the entire model in memory, says Su, means that “for the largest models, it really reduces the number of GPUs you need, dramatically accelerating performance, especially for inference, as well as reducing the total cost of ownership.”

“By the way, I like this chip,” said Su excitedly. “We love this chip.”

“With the MI300X you can reduce the number of GPUs, and as model sizes continue to grow, this will become even more important.”

“With more memory, more memory bandwidth, and fewer GPUs required, we can run more inference tasks per GPU than before,” says Su. That would reduce the total cost of ownership for large language models, she said, making the technology more accessible.
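
To illustrate the GPU-count argument, the sketch below estimates the minimum number of GPUs whose combined memory can hold a model’s weights. The 175-billion-parameter model size, the 16-bit weight precision, and the 80-gigabyte comparison GPU are all assumptions chosen for illustration, and the estimate ignores the extra memory needed at run time.

```python
import math


def gpus_needed(params_billions: float, gpu_memory_gb: float,
                bytes_per_param: int = 2) -> int:
    """Minimum number of GPUs whose combined memory holds the weights."""
    weights_gb = params_billions * bytes_per_param  # 1 GB = 1e9 bytes
    return math.ceil(weights_gb / gpu_memory_gb)


MODEL_PARAMS_BILLIONS = 175  # hypothetical model size, for illustration only

for label, memory_gb in [("192 GB GPU", 192), ("80 GB GPU", 80)]:
    count = gpus_needed(MODEL_PARAMS_BILLIONS, memory_gb)
    print(f"{label}: at least {count} GPUs")
```

With these assumed numbers, the weights fit on two 192-gigabyte GPUs but need at least five 80-gigabyte GPUs.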

To compete with Nvidia’s DGX systems, Su unveiled a family of AI computers, the “AMD Instinct Platform.” The first version will combine eight MI300X GPUs with 1.5 terabytes of HBM3 memory. The server conforms to the industry-standard Open Compute Project specification.
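
The 1.5-terabyte figure follows directly from the per-GPU memory quoted earlier, as this short check shows. It assumes decimal units (1 terabyte = 1,000 gigabytes).

```python
GPUS_PER_PLATFORM = 8    # MI300X GPUs in the first Instinct Platform
MEMORY_PER_GPU_GB = 192  # HBM3 capacity per MI300X, as quoted in this article

total_gb = GPUS_PER_PLATFORM * MEMORY_PER_GPU_GB
total_tb = total_gb / 1000  # assumes decimal terabytes

print(f"{total_gb} GB total, about {total_tb:.1f} TB")
```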

“For customers, they can use all of this AI computing power in memory in an industry-standard platform that sits right inside their existing infrastructure,” says Su.

Unlike the MI300X, which is only a GPU, the existing MI300A is going up against Nvidia’s Grace Hopper combination chip, which pairs Nvidia’s Grace CPU with its Hopper GPU and which Nvidia announced last month is in full production.

MI300A is being integrated into the El Capitan supercomputer being built at the Department of Energy’s Lawrence Livermore National Laboratory, Su noted.

Su said the MI300A is sampling now to AMD customers, and the MI300X will begin sampling to customers in the third quarter of this year. She said both will be in mass production in the fourth quarter.

You can watch a replay of the presentation on the website AMD set up for the announcement.