DeepMind’s Perceiver AR: a step forward for AI efficiency

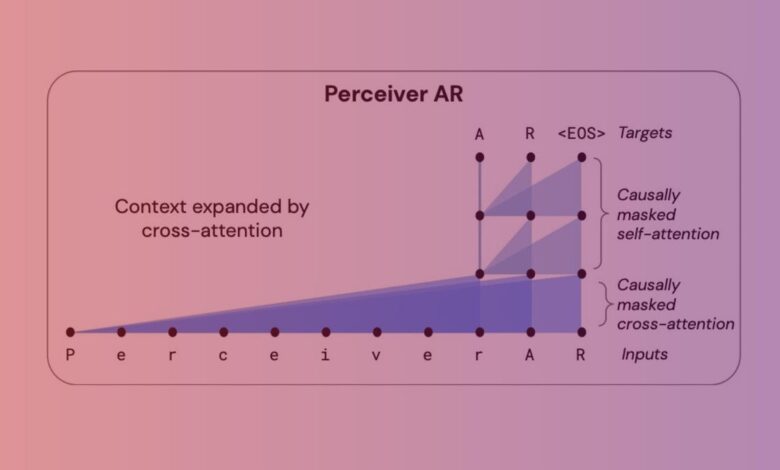

The sensor-based AR architecture of DeepMind and Google Brain reduces the task of computing the combinatorial nature of inputs and outputs into a latent space, but with the difference that the latent space has a “mask”. causality” to add the auto-regression order of a typical Transformer. DeepMind / Google Brain

One of the alarming aspects of the hugely popular deep learning segment of artificial intelligence is the growing size of programs. Experts in the field say that computing tasks are intended to get bigger and bigger because scale matters.

Such larger and larger programs are resourceful, and it is an important issue in the ethics of deep learning for society, a dilemma that has attracted the attention of mainstream scientific journals like Nature.

That’s why it’s interesting any time that the term efficiency is introduced, such as, Can we make this AI program more efficient?

Scientists at DeepMind and at Google’s Brain division, recently adapted a neural network that they introduced last year, Perceiverto make it more efficient in terms of its computing power requirements.

The new program, Perceiver AR, is named for the “self-defeating” aspect of a growing number of deep learning programs. Autoregression is a technique for a machine to use its output as new input to the program, a recursive operation that forms an attention map of how many elements relate to each other.

Transformer, the hugely popular neural network that Google introduced in 2017, has this auto-recovery aspect. And many models since, including GPT-3 and the first version of the Transceiver.

Perceiver AR follows the second version of Perceiver, called Perceiver IO, introduced in March, and the original Perceiver a year ago this month.

The innovation of the original perceptor was to take the Transformer and modify it to allow it to use all sorts of things inputinclude audio text and images, in a flexible form, rather than being limited to a specific input type, for which separate types of neural networks are often developed.

Perceiver is one of a growing number of programs that use autoregressive attention mechanisms to combine different input methods and different task domains. Other examples include Google’s Pathways, DeepMind’s Gatoand by Meta data2vec.

Also: DeepMind’s ‘Gato’ is mediocre, so why did they build it?

Then, in March, the same team of Andrew Jaegle and colleagues built Perceiver, introduce the “IO” versionthis has enhanced output of Transceivers to accommodate more than categorization, achieving a wide range of outputs with all types of constructs, from textual language outputs to optical stream fields to audiovisual sequences to unsigned sets. symbolic self. It can even create motion in the game StarCraft II.

Now, in the article, Long-term, general-purpose contextual autorecovery model with Perceiver ARJaegle and team face the question of how models will scale as they become more and more ambitious in those multimodal input and output tasks.

The problem is, the quality of autoregression of Transformers and any other program that builds attention maps from input to output, is that it requires extremely large scales of distributions over hundreds of thousands element.

It’s the Achilles’ Heel of Attention, precisely the need to pay attention to anything and everything to assemble the probability distributions that make up the attention map.

Also: Meta ‘data2vec’ is a step towards One Neural Network to Rule All

As Jaegle and team said, it becomes a nightmare of scale in computer terms as the number of things that have to be compared in the input increases:

There is a tension between the type of contextual, long form structure and the computational properties of Transformers. Continuity transformers apply self-attention to their inputs: this leads to simultaneous computation requirements that increase quadratic with input length and linearly with model depth. As the input data grows longer, more input tokens are needed to observe it, and as the elements in the input data become more sophisticated and complex, more depth is needed to model. transform patterns that produce results. Computational constraints force the user of the Transformer to truncate the inputs to the model (preventing the model from observing a wide variety of long-range samples) or to restrict the depth of the model (which loses power) expressions needed to model complex patterns).

In fact, the original Transceiver gave improved efficiency over the Transformer by making attention to the latent input representation, rather than the direct one. That has the effect of “[decoupling] The computational requirements of processing a large input array range from those required to create a very deep network. ”

Comparison of the Perceiver AR with the standard Transformer deep network and the enhanced Transformer XL. DeepMind / Google Brain

The latent part, where representations of the input are compressed, becomes a more effective kind of tool for attracting attention, thus, “For deep networks, the self-attention stack is where the latent part occurs. large computation” instead of operating on an infinite number of inputs.

But the challenge remains that the Transceiver can’t produce the output the way the Transformer does because that latent representation has no sense of order, and order is essential in autoscaling. Regression. Each output is said to be a product of what has come before it, not after.

Also: Google reveals ‘Pathways’, a next-generation AI that can be trained to multitask

They write, “However, because each model latent pays attention to all inputs regardless of position, the Transceiver cannot be used directly to generate autoregression, which requires each the model’s output depends only on the inputs that precede it in sequence,” they write.

With Perceiver AR, the team goes further and inserts order into the Transceiver to make it capable of performing that autoregression function.

The key is what’s known as the “causal mask” of both the input, where “cross-attention and latent representation take place, to force the program to only engage in things that precede a certain symbol.” That approach restores the directional quality of the Transformer, but with much less computation.

The result is the ability to do what Transformers do on more inputs but with greatly improved performance.

They write: “Perceiver AR can learn to perfectly recognize long context patterns over a distance of at least 100k tokens in an aggregate replication task”, compared to a hard limit of 2,048 tokens for Transformer, where more tokens equals longer context, which will equal more sophistication in program output.

Also: AI in 60 seconds

And the Perceiver AR does so with “improved efficiency over the Transformer and Transformer-XL architectures only for the widely used decoder and the ability to change the computer used at the time of the test.” experience to match the target budget.”

Specifically, the time on the wall clock to compute the Perceiver AR, they write, is significantly reduced for the same amount of attention and a much greater likelihood of getting context – more input symbols – with the same a calculated budget:

Transformer is limited to a context length of 2,048 tokens, even with only 6 classes—larger models and larger context lengths require too much memory. Using the same 6-layer configuration, we can scale the Transformer-XL memory to a total context length of 8,192. Perceiver AR scales to 65k context lengths and can be scaled to over 100k contexts with further optimization.

All that means is computing flexibility: “This gives us more control over how much computing is used for a given model at the time of testing, and allows us to strike a balance between speed with smooth performance.”

Jaegle and colleagues write that this approach can be used on any type of input, not just word symbols, e.g. pixels of an image:

The same procedure can be applied to any input that can be ordered, as long as a mask is applied. For example, the RGB channels of an image can be arranged in raster scan order, by decoding the R, G, and B color channels for each pixel in the sequence, or even under different permutations.

Also: The ethics of AI: The benefits and risks of artificial intelligence

The authors see huge potential for Perceiver to get anywhere, writing that “Perceiver AR is a good candidate for a long-term, general-purpose range automation model”.

However, there is another problem in the efficiency factor of the computer. Several recent attempts, the authors note, have attempted to cut the computational budget for autoregressive attention by using “sparse”, the process of limiting which inputs have meaningful.

At the same wall clock time, Perceiver AR can run more symbols from the input through the same number of layers, or run the same number of input symbols while requiring less computation time – a way flexibility which the authors believe can be a common approach to greater efficiency in large networks. DeepMind / Google Brain

That has some downsides, being essentially too rigid. The disadvantage of methods that use sparsity, they write, is that this sparsity must be manually adjusted or generated using tests that are often domain-specific and can be difficult to tune. That includes efforts like OpenAI and Nvidia’s 2019″Transformers. “

In contrast, our work does not create a hand-crafted sparse model on attention layers, they write, but allows the network to learn which long-context inputs to join and propagate across. network”.

“The initial cross-attendance operation, which reduces the number of slots in the chain, can be viewed as a learned form of sparseness,” they added.

It is possible that sparsity has been learned in this way, which in itself could be a powerful tool in the toolkit of deep learning models in the years to come.