Researchers release open-source photorealistic simulator for autonomous driving

MIT scientists unveil the first open-source simulation engine capable of constructing realistic environments for training and testing deployable autonomous vehicles.

Hyper-realistic virtual worlds have been heralded as the best driving schools for autonomous vehicles (AVs), because they have proven to be effective test beds for safely trying out dangerous driving scenarios. Tesla, Waymo, and other self-driving companies all rely heavily on data to enable expensive and proprietary photorealistic simulators, because nuanced “I almost crashed” data is usually neither easy nor desirable to test for and collect in the real world.

VISTA 2.0 is an open source simulation tool that can create a realistic environment for training and testing self-driving cars. Image credit: MIT CSAIL.

To that end, scientists from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) created “VISTA 2.0,” a data-driven simulation engine in which vehicles can learn to drive in the real world and recover from near-crash scenarios. Furthermore, all of the code is being released to the public as open source.

“Today, only companies have software like the type of simulation environments and capabilities of VISTA 2.0, and this software is proprietary. With this release, the research community will have access to a powerful new tool for accelerating the research and development of adaptive robust control for autonomous driving,” said MIT Professor and CSAIL Director Daniela Rus, senior author of a paper about the research.

VISTA 2.0 builds on the team’s previous model, VISTA, and it is fundamentally different from existing AV simulators because it is data-driven, meaning it is built and photorealistically rendered from real-world data, which enables direct transfer to reality. While the first iteration supported only single-car lane following with a single camera sensor, achieving high-fidelity data-driven simulation required a fundamental rethink of how different sensors and behavioral interactions can be synthesized.

Enter VISTA 2.0: a data-driven system that can simulate complex sensor types and massively interactive scenarios and intersections at scale. With much less data than previous models, the team was able to train autonomous vehicles that could be substantially more robust than those trained on large amounts of real-world data.

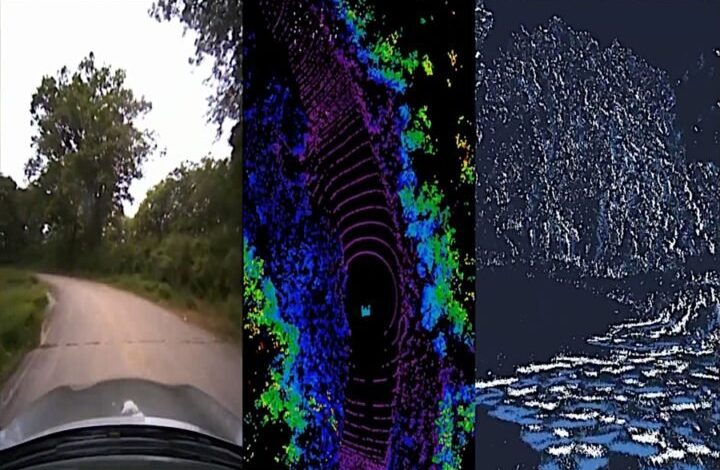

“This is a huge step forward in the capabilities of data-driven simulation for autonomous vehicles, as well as an increase in scale and in the ability to handle greater driving complexity,” said Alexander Amini, CSAIL PhD student and co-author on two new papers, along with graduate student Tsun-Hsuan Wang. “VISTA 2.0 demonstrates the ability to simulate sensor data far beyond 2D RGB cameras, but also extremely high-dimensional 3D lidars with millions of points, irregularly timed event-based cameras, and even interactive and dynamic scenarios with other vehicles.”

The team was able to scale the complexity of interactive driving tasks such as overtaking, following, and negotiating, including multi-agent scenarios in highly photorealistic environments.

Training AI models for self-driving cars requires hard-to-obtain data covering many kinds of edge cases and strange, dangerous scenarios, because most of our data (thankfully) is just ordinary, day-to-day driving. Logically, we can’t crash into other cars just to teach a neural network how not to crash into other cars.

Recently, there has been a shift from more classical, human-designed simulation environments to those built from real-world data. The latter have tremendous photorealism, but the former can easily model virtual cameras and lidars. With this paradigm shift, an important question has arisen: Can the richness and complexity of all the sensors that autonomous vehicles need, such as lidar and the sparser event-based cameras, be accurately synthesized?

Lidar sensor data is much harder to interpret in a data-driven world: you are effectively trying to generate brand-new 3D point clouds with millions of points, just from sparse views of the world. To synthesize 3D lidar point clouds, the team used the data the vehicle collected, projected it into a 3D space derived from the lidar data, and then let a new virtual vehicle drive around locally from where the original vehicle was. Finally, they projected all of that sensory information back into the frame of view of this new virtual vehicle, with the help of neural networks.
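The geometric part of that last step can be illustrated with a small sketch. This is not VISTA 2.0's code: the function name `reproject_to_virtual_frame` and its parameters are hypothetical, the motion is assumed to be planar (a shift plus a heading change), and the neural-network step the team describes for handling sparsity is left out entirely. It only shows how points recorded relative to the original car can be re-expressed relative to a virtual car at a nearby pose.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # (x forward, y left, z up), in metres


def reproject_to_virtual_frame(
    points: List[Point],
    dx: float,
    dy: float,
    yaw: float,
) -> List[Point]:
    """Re-express points from the original vehicle's frame in the frame of a
    virtual vehicle located at (dx, dy) with heading `yaw` (radians), both
    measured in the original vehicle's frame. Hypothetical helper.
    """
    cos_y, sin_y = math.cos(yaw), math.sin(yaw)
    reprojected = []
    for x, y, z in points:
        # Move the origin to the virtual vehicle's position.
        tx, ty = x - dx, y - dy
        # Rotate by -yaw so the axes align with the virtual vehicle's heading.
        reprojected.append((cos_y * tx + sin_y * ty, -sin_y * tx + cos_y * ty, z))
    return reprojected


# A point 2 m ahead of the original car, seen from a virtual car 1 m further
# along the road with the same heading, ends up 1 m ahead of the virtual car.
scan = [(2.0, 0.0, 0.5)]
print(reproject_to_virtual_frame(scan, dx=1.0, dy=0.0, yaw=0.0))  # [(1.0, 0.0, 0.5)]
```

A rigid transform like this leaves gaps wherever the virtual viewpoint sees surfaces the original scan missed, which is presumably part of why the article describes a neural network assisting with the final result.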

Together with the simulation of event-based cameras, which operate at rates greater than thousands of events per second, the simulator is capable not only of simulating this multimodal information but also of doing so in real time. This makes it possible to train neural nets offline and also to test online on the car in augmented reality setups for safe evaluation. “Whether multisensor simulation at this scale of complexity and photorealism was possible in the realm of data-driven simulation was very much an open question,” Amini said.

With that, the driving school becomes a party. In the simulation, you can move around, have different types of controllers, simulate different types of events, create interactive scenarios, and just drop in brand-new vehicles that were not even in the original data. The team tested lane following, lane turning, car following, and dicier scenarios such as static and dynamic overtaking (seeing obstacles and moving around them so you don’t collide). With multi-agent simulation, both real and simulated agents interact with each other, and new agents can be dropped into the scene and controlled in any number of ways.

Taking their full-scale vehicle out into the “wild” (aka Devens, Massachusetts), the team saw immediate transferability of results, with both failures and successes. They were also able to demonstrate the magic word of self-driving car models: “robust.” They showed that AVs trained entirely in VISTA 2.0 were robust enough in the real world to handle the elusive tail of challenging failures.

Now, one guardrail that humans rely on and that cannot yet be simulated is human emotion. It’s the friendly wave, the nod, or the flick of a blinker in acknowledgment, which are the kinds of nuances the team wants to work on in the future.

“The central algorithm of this research is how we can take a dataset and build a completely synthetic world for learning and autonomy,” Amini said. “It’s a platform that I believe could one day extend along many different axes across robotics. Not only autonomous driving, but many areas that rely on vision and complex behaviors. We are excited to release VISTA 2.0 to help the community collect their own datasets and convert them into virtual worlds where they can directly simulate their own virtual autonomous vehicles, drive around these virtual terrains, train autonomous vehicles in these worlds, and then directly transfer them to full-sized, real self-driving cars.”

Written by Rachel Gordon

Source: Massachusetts Institute of Technology