Minerva: Solving quantitative reasoning problems with language models

Language models perform well on many natural language tasks. However, quantitative reasoning has long been considered out of reach for current techniques. A recent Google AI paper introduces Minerva, a language model that solves mathematical and scientific questions using step-by-step reasoning.

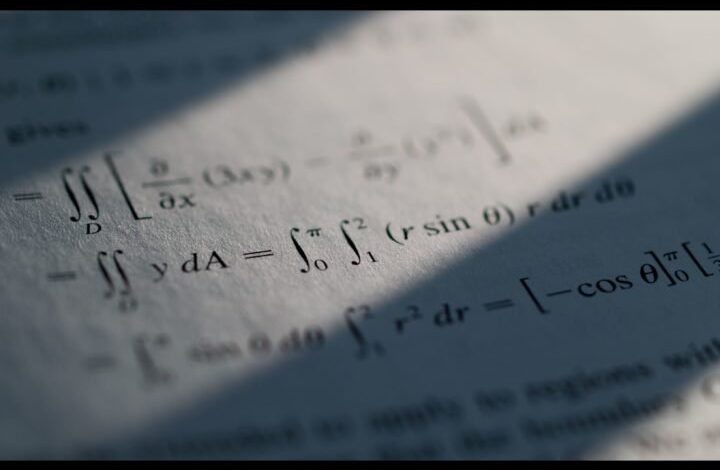

Mathematics – artistic impression. Image credits: Peter Rosbjerg via Flickr, CC BY-ND 2.0

Minerva is built on top of the Pathways Language Model (PaLM), which was further trained on a 118GB dataset of scientific papers from arXiv and web pages containing mathematical expressions. It also incorporates recent prompting and evaluation techniques, such as chain-of-thought prompting and majority voting, to better tackle math questions.
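Majority voting, mentioned above, can be illustrated with a short Python sketch. It is a minimal illustration, not Minerva's actual implementation: it assumes each sampled step-by-step solution ends with a line of the form `Final answer: ...` (a hypothetical format), and the helpers `extract_final_answer` and `majority_vote` are names invented for this example. The idea is that the model samples several solutions to the same question, the intermediate reasoning is ignored, and the final answer that occurs most often wins.

```python
import re
from collections import Counter
from typing import List, Optional

# Hypothetical answer format: assumes each sampled solution ends with a line
# such as "Final answer: 42". Minerva's real prompt format may differ.
ANSWER_PATTERN = re.compile(r"final answer:\s*(.+)", re.IGNORECASE)


def extract_final_answer(solution: str) -> Optional[str]:
    """Return the normalized final answer of one sampled solution, or None."""
    matches = ANSWER_PATTERN.findall(solution)
    if not matches:
        return None
    # Take the last match and lightly normalize so "12." and " 12" agree.
    return matches[-1].strip().rstrip(".").replace(" ", "")


def majority_vote(solutions: List[str]) -> Optional[str]:
    """Return the most common final answer across sampled solutions.

    Only final answers are compared; the reasoning steps are ignored.
    Ties go to the answer that appeared first.
    """
    answers = [a for a in map(extract_final_answer, solutions) if a is not None]
    if not answers:
        return None
    answer, _count = Counter(answers).most_common(1)[0]
    return answer


if __name__ == "__main__":
    samples = [
        "2 + 2 = 4, and 4 * 3 = 12.\nFinal answer: 12",
        "2 + 2 = 5, so 5 * 3 = 15.\nFinal answer: 15",
        "Adding gives 4; tripling gives 12.\nFinal Answer: 12.",
    ]
    print(majority_vote(samples))  # prints 12
```

Voting on final answers rather than on full solution text lets different but equally valid reasoning paths reinforce each other, while an occasional faulty chain of reasoning (like the second sample) is outvoted.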

Evaluations on undergraduate- and graduate-level problems covering a wide variety of STEM topics show that Minerva achieves state-of-the-art results, sometimes by a wide margin. Models like Minerva have many possible applications, from assisting researchers to creating new learning opportunities for students.

Link: https://ai.googleblog.com/2022/06/minerva-solving-quantity-reasoning.html
