IPCC AR6: Breaking the hegemony of global climate models

by Judith Curry

A rather astonishing conclusion drawn from reading the fine print of the IPCC AR6 WG1 Report.

Well, I’ve been reading the fine print of the IPCC AR6 WG1 Report. The authors are to be congratulated for preparing a document that is vastly more intellectually sophisticated than its recent predecessors. Topics like ‘deep uncertainty’ and model ‘fitness-for-purpose’ (common topics at Climate Etc.) actually get significant mention in the AR6. Further, natural internal variability receives a lot of attention, and volcanoes a fair amount (solar not so much).

If we hark back to the IPCC AR4 (2007), global climate models ruled, as exemplified by this quote:

“There is considerable confidence that climate models provide credible quantitative estimates of future climate change, particularly at continental scales and above.”

The IPCC AR4 determined its likely range of climate sensitivity values almost exclusively from climate model simulations. And its 21st century projections were determined directly from climate model simulations driven solely by emissions scenarios.

Some hints of concern about what the global climate models were producing appeared in the AR5. With regard to climate sensitivity, the AR5 included this statement in a footnote to the SPM:

“No best estimate for equilibrium climate sensitivity can now be given because of a lack of agreement on values across assessed lines of evidence and studies.”

More specifically, observationally based estimates of ECS were substantially lower than the climate model values.

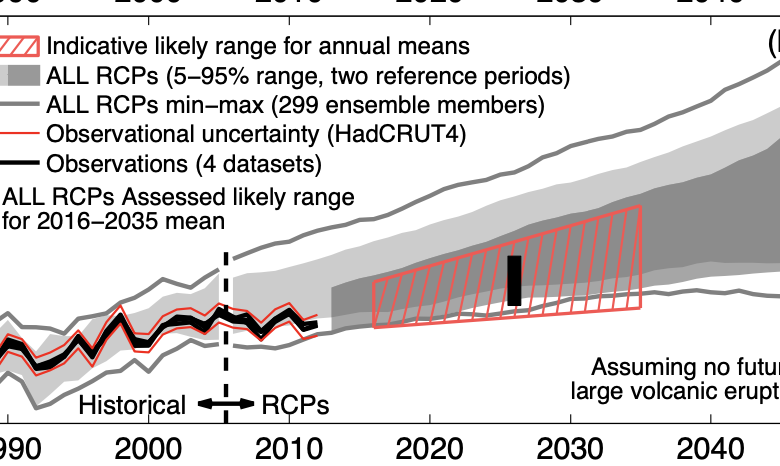

Perhaps more significantly, Figure 11.25 in the AR5 included a red-hatched area, determined subjectively from the ‘expert judgment’ that the climate models were running too hot. Notably, the projections beyond 2035 were not similarly adjusted.

IPCC AR6 – global warming

The IPCC AR6 takes what was begun in the AR5 much further.

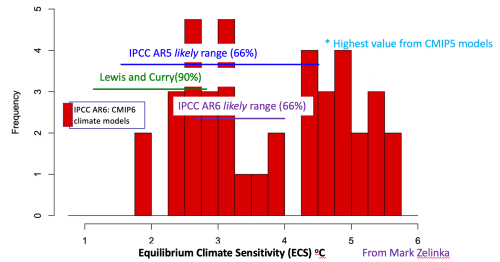

With regards to equilibrium climate sensitivity, the AR6 breaks with the long-standing range of 1.5-4.5C and narrows the ‘likely’ range to 2.5-4.0 C. Here is how that range compares with previous estimates and also the CMIP6 models (as analyzed by Mark Zelinka):

The AR6 analysis of ECS was heavily influenced by Sherwood et al. (2020). I agree with lowering the top value from 4.5 to 4.0C. However, I do not agree with their rationale for raising the lower value from 1.5 to 2.5C. Without going into detail on my concerns here, I note that Nic Lewis is working on an analysis of this. But the main significance of AR6’s narrower range is that the CMIP6 ECS values had little influence on it.

A substantial number of the CMIP6 models are running way too hot, which has been noted in many publications. For its projections of 21st century global mean surface temperature, the AR6 provides ‘constrained’ projections (including climate models with reasonable values of climate sensitivity that reasonably simulate the 20th century). Figure 4.11 of the AR6 shows the magnitude of the constraints: for SSP5-8.5, the constrained projection differs from the unconstrained CMIP6 projection by 20%.

For the first time, CMIP6 includes actual scenarios of volcanic activity and solar variability. CMIP6 includes a background level of volcanic activity (no major eruptions) and an actual projection of 21st century solar variability from Matthes (2017) (discussed previously here), although few models are up to the task of credibly handling solar indirect effects. The AR6 only considers these baseline solar and volcano scenarios; the other volcanic scenarios (shown in Figure 1, Box 4.1 of the AR6) and the Maunder minimum scenario from Matthes (2017) are surely more plausible than SSP5-8.5 and hence should have been included in the projections.

The AR6 also acknowledges the importance of natural internal variability in many of its chapters. CMIP6 included Single Model Initial Condition Large Ensembles (SMILEs; section 6.1.3). However, there are substantial disparities between the large-scale circulation variability in observations and that in most models (IPCC AR6 Chapter 3): decadal variability is too strong, while multi-decadal and centennial variability is too weak. A few of the models, notably GFDL, seem to do a pretty good job.

Here are the ensemble forecasts for SSP2-4.5, including the projections from the individual models, the ‘constrained’ versus ‘unconstrained’ 90% range, and the AR6 best estimate (note this image was pulled from a CarbonBrief article). The AR6 best estimate is near the lower end of the entire range; this bias leaves little scope, at the lower end of the model range, for natural variability (particularly of the multi-decadal variety) to illustrate a realistic range of times at which we might pass the 1.5 and 2C ‘danger’ thresholds.

To minimize some of the problems related to constraining the projections, the AR6 emphasizes assessing impacts at different levels of global warming, e.g. 2 and 4 degrees C.

Regional projections

The IPCC AR6 report places substantial emphasis on regional climate change (Chapters 10, 12). The focus is on distilling diverse sources of information and multiple lines of evidence, which indirectly acknowledges that global climate models aren’t of much use for regional projections.

Climate emulators

Since the Special Report on Global Warming of 1.5°C, the IPCC has increasingly emphasized the use of climate emulators, which are highly simplified climate models (see this CarbonBrief article for an explainer) that are tuned to the results of the Earth System Models built on global general circulation models. These emulators are very convenient for policy analysis, enabling pretty much anyone to run many different scenarios.

And there’s no reason why this general framework couldn’t be expanded to include future scenarios of warming/cooling related to volcanoes and solar, and also multi-decadal internal variability. This framework could be very useful for regional climate projections.

However, climate emulators are not physics-based models.
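To make the idea of a highly simplified, tuned model concrete, here is a minimal sketch of one simple emulator form: a two-layer energy-balance model in Python, in which a surface layer and a deep-ocean layer exchange heat. This is an illustrative assumption, not the emulator the IPCC actually used. The parameter values (feedback `lam`, exchange coefficient `gamma`, heat capacities `c_mix` and `c_deep`) and the 278 ppm pre-industrial CO2 baseline are hypothetical round numbers, not tuned to any particular ESM; the CO2 forcing uses the common 5.35 ln(C/C0) W m-2 approximation.

```python
import math

# Hypothetical illustrative constants (assumptions, not taken from the AR6)
PREINDUSTRIAL_CO2_PPM = 278.0
F2X = 5.35 * math.log(2.0)  # W m^-2, forcing from a doubling of CO2


def co2_forcing(conc_ppm, ref_ppm=PREINDUSTRIAL_CO2_PPM):
    """Simplified logarithmic CO2 radiative forcing, in W m^-2."""
    return 5.35 * math.log(conc_ppm / ref_ppm)


def two_layer_emulator(forcing, lam=1.3, gamma=0.7, c_mix=8.0, c_deep=100.0, dt=1.0):
    """Step a two-layer energy-balance model forward with explicit Euler.

    forcing: forcing for each time step (W m^-2)
    lam:     climate feedback parameter (W m^-2 K^-1)
    gamma:   heat-exchange coefficient between the layers (W m^-2 K^-1)
    c_mix:   heat capacity of the surface/mixed layer (W yr m^-2 K^-1)
    c_deep:  heat capacity of the deep ocean (W yr m^-2 K^-1)
    dt:      time step (years)
    Returns the surface temperature anomaly (K) after each step.
    """
    t_surf, t_deep = 0.0, 0.0
    surface_temps = []
    for f in forcing:
        # Heat flux from the surface layer into the deep ocean (old values)
        exchange = gamma * (t_surf - t_deep)
        t_surf += dt * (f - lam * t_surf - exchange) / c_mix
        t_deep += dt * exchange / c_deep
        surface_temps.append(t_surf)
    return surface_temps


if __name__ == "__main__":
    # Equilibrium sensitivity implied by the chosen feedback parameter
    ecs = F2X / 1.3
    # Idealized 1%/yr CO2 increase, which roughly doubles CO2 after 70 years
    ramp = [co2_forcing(PREINDUSTRIAL_CO2_PPM * 1.01 ** yr) for yr in range(1, 71)]
    warming_at_doubling = two_layer_emulator(ramp)[-1]
    print(f"ECS: {ecs:.2f} K, warming at ~CO2 doubling: {warming_at_doubling:.2f} K")
```

In a sketch like this the whole climate system reduces to four tunable numbers; ‘tuning to ESM output’ amounts to fitting `lam`, `gamma`, `c_mix` and `c_deep` so the emulator reproduces a given ESM’s temperature response. The equilibrium sensitivity is simply F2X/`lam`, about 2.85 K with the values above.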

Are global climate models the best tools?

Text from an essay I am writing:

In the 1990s, the perceived policy urgency required a quick confirmation of dangerous human-caused climate change. GCMs were invested with this authority by policy makers desiring a technocratic basis for their proposed policies (Shackley et al.). However, both the scientific and policy challenges of climate change are much more complex than was envisioned in the 1990s. The end result is that the climate modeling enterprise has attempted a broad range of applications driven by the needs of policy makers, using models that are not fit for purpose.

Complex computer simulations have come to dominate climate science and its related fields, at the expense of the traditional knowledge sources of theoretical analysis and of challenging theory with observations. In an article aptly titled ‘The perils of computing too much and thinking too little,’ atmospheric scientist Kerry Emanuel raised the concern that inattention to theory is producing climate researchers who use these vast resources ineffectively, and that the opportunity for true breakthroughs in understanding and prediction is being diminished.

Complexity of model representation has become a central normative principle in evaluating climate models and their policy utility. However, not only are GCMs resource-intensive and intractable to interpret, they are also pervaded by overparameterization and inadequate attention to uncertainty.

The numerous problems with GCMs, and concerns that these problems will not be addressed in the near future given the current development path of these models, suggest that alternative model frameworks should be explored. We need a plurality of climate models that are developed and utilized in different ways for different purposes. For many issues of decision support, the GCM centric approach may not be the best approach. However, a major challenge is that nearly all of the resources are being spent on GCMs and IPCC production runs, with little time and funds left over for model innovations.

The policy-driven imperative of climate prediction has resulted in the accumulation of power and authority around GCMs (Shackley), based on the promise of using GCMs to set emissions reduction targets and for regional predictions of climate change. However, the IPCC is increasingly relying on much simpler models for setting emissions targets. The hope for useful regional predictions of climate change using GCMs is unlikely to be realized based on the current path of model development.

With regard to the fitness for purpose of global/regional climate models for climate adaptation decision making, an excellent summary is provided by a team of scientists from Columbia University’s Earth Institute and the Red Cross Red Crescent Climate Centre:

“Climate model projections are able to capture many aspects of the climate system and so can be relied upon to guide mitigation plans and broad adaptation strategies, but the use of these models to guide local, practical adaptation actions is unwarranted. Climate models are unable to represent future conditions at the degree of spatial, temporal, and probabilistic precision with which projections are often provided which gives a false impression of confidence to users of climate change information.” (Nissan et al.)

GCMs clearly have an important role to play, particularly in scientific research. However, the focus of resources on this one path of climate modeling, driven by the urgent needs of policy makers, is arguably slowing the advancement of climate science. The numerous problems with GCMs, and concerns that these problems will not be addressed in the near future given the current development path, suggest that alternative frameworks should be explored. This is particularly important for the science-policy interface.

JC reflections

In the AR5, the emphasis was on the Earth System Models and their ever-growing complexity in terms of adding more chemistry and some ice sheet dynamics.

In AR6, these complex climate models are revealed for what they are: very complicated and computationally intensive toys, whose main results depend on fast thermodynamic feedback processes (water vapor, lapse rate, clouds) that are determined by subgrid-scale parameterizations and the inevitable model tuning.

With the very large range of climate sensitivity values provided by the CMIP6 models, we are arguably in a period of negative learning. And this is in spite of the IPCC AR6 substantially narrowing the range of ECS from the long-standing 1.5-4.5C to 2.5-4.0C (reminder: I am not buying this reduction at the low end; more on this soon).

So what are we left with?

- Global climate models (ESMs) remain an important tool for understanding how the climate system works. However, we have reached the point of diminishing returns on this unless there is more emphasis on improving the simulation of modes of internal climate variability and advancing the treatment of solar indirect effects.

- We should abandon ECS as a policy-relevant metric and work on better understanding and evaluation of TCR and TCRE from historical data.

- In the context of the first point, I question whether the CMIP6 ESMs have much use in attribution studies.

- ESMs have lost their utility for policy applications. Policy applications are far more usefully served by climate emulators. However, the use of climate emulators distances policy making from a basis in physics. This is particularly relevant for the legal status of 21st century climate projections and of the ESMs in various climate lawsuits.
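On the point about estimating TCR and TCRE from historical data: one widely used approach is the energy-budget relation TCR ≈ F_2x ΔT/ΔF, where ΔT and ΔF are the changes in global mean surface temperature and effective radiative forcing between a base period and a final period; TCRE is CO2-attributable warming per unit of cumulative CO2 emissions. The Python sketch below only shows the arithmetic. The function names and the value F_2x = 3.7 W m-2 are assumptions for illustration, and the hard part in practice is the observational inputs (especially aerosol forcing within ΔF and, for TCRE, isolating the CO2-attributable part of ΔT).

```python
# Hypothetical helper functions for illustration; F_2x is an assumed value.
F2X = 3.7  # W m^-2, assumed forcing from a doubling of CO2


def energy_budget_tcr(delta_t, delta_f, f2x=F2X):
    """Energy-budget TCR estimate (K): F_2x * dT / dF.

    delta_t: change in global mean surface temperature between a base
             period and a final period (K)
    delta_f: change in effective radiative forcing between the same
             periods (W m^-2)
    """
    if delta_f <= 0:
        raise ValueError("forcing change must be positive")
    return f2x * delta_t / delta_f


def tcre_per_1000_gtc(delta_t_co2, cumulative_co2_gtc):
    """TCRE (K per 1000 GtC): CO2-attributable warming per unit cumulative emissions.

    delta_t_co2:        warming attributed to CO2 (K)
    cumulative_co2_gtc: cumulative CO2 emissions over the same interval (GtC)
    """
    if cumulative_co2_gtc <= 0:
        raise ValueError("cumulative emissions must be positive")
    return 1000.0 * delta_t_co2 / cumulative_co2_gtc
```

With ΔT = 1.0 K and ΔF = 2.0 W m-2 (round numbers chosen only to show the arithmetic, not observational values), `energy_budget_tcr` returns 1.85 K.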

While this is hidden in the Summary for Policymakers, it is pretty significant:

“A.1.3 The likely range of total human-caused global surface temperature increase from 1850–1900 to 2010–2019 is 0.8°C to 1.3°C, with a best estimate of 1.07°C. It is likely that well-mixed GHGs contributed a warming of 1.0°C to 2.0°C, other human drivers (principally aerosols) contributed a cooling of 0.0°C to 0.8°C, natural drivers changed global surface temperature by –0.1°C to 0.1°C, and internal variability changed it by –0.2°C to 0.2°C. It is very likely that well-mixed GHGs were the main driver of tropospheric warming since 1979, and extremely likely that human-caused stratospheric ozone depletion was the main driver of cooling of the lower stratosphere between 1979 and the mid-1990s.”

Compare this to the statements in the AR5 SPM:

“It is extremely likely that more than half of the observed increase in global average surface temperature from 1951 to 2010 was caused by the anthropogenic increase in greenhouse gas concentrations and other anthropogenic forcings together. The best estimate of the human-induced contribution to warming is similar to the observed warming over this period.”

Overall, the AR6 WG1 report is much better than the AR5, although I remain unimpressed by their increased confidence in a narrower range of ECS.

The bottom line is that the AR6 has broken the hegemony of the global climate models. The large amount of funding supporting these models towards policy objectives just became more difficult to justify.