Impact of artificial intelligence on healthcare

Artificial intelligence (AI) has extraordinary potential to enhance healthcare, from improving medical diagnosis and treatment to assisting surgeons at every stage of a surgical procedure, from preparation to completion.

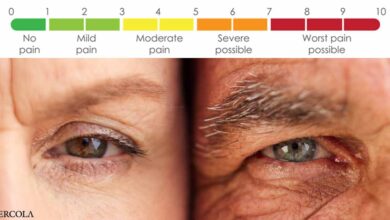

Using machine learning and deep learning, algorithms can be trained to recognize certain pathologies, such as melanoma in skin cancer. Given clean, well-documented datasets, AI can also analyze medical images to detect disease.

As a result, AI helps optimize the allocation of human and technical resources.

Furthermore, by drawing on massive datasets, AI can improve patient prognosis and treatment choice, tailoring treatment to both the disease and the individual patient's characteristics.

Dr. Harvey Castro, a physician and healthcare consultant, points out that the recent integration of Microsoft’s Azure OpenAI service with Epic’s electronic health record (EHR) software is proof that generative AI is also making important breakthroughs in the healthcare sector.

“One use case could be patient triage, where the AI is really like a resident: the doctor talks, and it takes all the information down and uses its capture capabilities and algorithms to start triaging those patients,” he said. “If you have 100 patients in the waiting room, there’s a lot of information coming in. You’ll be able to start prioritizing even if you haven’t seen the patient.”


The key, Castro adds, is that any application of AI must make sense and improve clinical care, rather than being deployed as a “shiny new tool” that doesn’t help clinicians or patients.

He sees a future where large language models (LLMs), neural networks trained on large amounts of unlabeled text that form the basis of many AI tools, are created specifically for use in healthcare.

“One of the problems with ChatGPT is that it’s not designed for healthcare,” says Castro. “For healthcare, we need an LLM that is consistently accurate, less prone to hallucinations, and based on data from a database that can be referenced and is unambiguous.”

The term ‘hallucination’ refers to an AI system producing responses or outputs that are nonsensical or factually wrong.

In his view, the future of healthcare will be marked by evolving LLMs with more predictive analytics and the ability to look at an individual’s genetic makeup, medical history, and birthmarks.

The importance of regulation

Eric Le Quellenec, a partner specializing in AI and healthcare at Simmons & Simmons, explains that regulation can ensure AI is used in a way that respects fundamental rights and freedoms.

The proposed EU AI Act, expected to be passed in 2023 and to apply from 2025, would set out Europe’s first regulatory framework for the technology. A draft proposal was presented by the European Commission in April 2021 and is still under discussion.

However, AI is also governed by other European legislation.

“First, any use of an AI system in connection with the processing of personal data is subject to the General Data Protection Regulation (GDPR),” he said.

Because health data is classed as sensitive data and is processed on a large scale, the GDPR requires a data protection impact assessment (DPIA).

“It’s a method of reducing risk, and in doing so, it’s easy to go beyond the scope of data protection and address ethics as well,” added Le Quellenec, noting that France’s data protection watchdog has provided a self-assessment fact sheet, as has the Information Commissioner’s Office (ICO) in the UK.

He added that the UNESCO Recommendation on the Ethics of Artificial Intelligence, published in November 2021, is also noteworthy.

“At this point, all of this is just ‘soft law’, but it is good enough to help stakeholders ensure that the data used for AI processing is reliable and to avoid many risks, such as ethnic, social and economic bias,” he continued.

In Le Quellenec’s view, the EU AI Act, once passed, should follow a risk-based approach, distinguishing between uses of AI that create unacceptable risk, high risk, and low or minimal risk, and should establish a list of prohibited practices for AI systems deemed unacceptable.

“AI used for healthcare is considered high risk,” explains Le Quellenec. “Before being placed on the European market, high-risk AI systems will have to be checked and obtain CE marking.”

He believes that high-risk AI systems should be designed and developed so that their operation is transparent enough for users to interpret the system’s output and use it appropriately.

“All of that will also instill confidence in the public and promote the use of AI-related products,” noted Le Quellenec. “In addition, human oversight will aim to prevent or reduce risks to health, safety or fundamental rights that may arise when high-risk AI systems are used.”

This is intended to ensure that the results provided by AI systems and algorithms are used only as an aid, and do not cause practitioners to lose autonomy or undermine medical practice.

Castro and Le Quellenec will both speak about AI at the HIMSS23 European Health Conference & Exhibition in Lisbon on June 7-9, 2023.