I’m designing an experiment to illustrate how ‘homogenization’ creates a false climate trend – suggestions welcome – can you help?

Longtime readers of WUWT know that I often enjoy doing hands-on experiments to illustrate and counter some of the most absurd climate change claims made on both sides of the aisle. On the alarmist side, you may remember this one:

Al Gore and Bill Nye Fail at a Simple CO2 Experiment

Replicating Al Gore’s Climate 101 video experiment (from the 24-hour Gore-a-thon) shows that his “high school physics” could never work as advertised

Unfortunately, YouTube has taken the video down, but I will try posting it elsewhere, such as on Rumble. The graphs of the temperature measurements and other images are still there.

Despite the fact that I proved beyond a doubt that the experiment was not only seriously flawed but actually FAILED, they are still using it as propaganda on Al Gore’s website today.

They never took it down. Schmucks.

So, along those lines, as Willis has done, I’ve been thinking about a recent paper published in Atmosphere by some of our brothers in arms in climate skepticism (Willie Soon, the Connollys, etc.):

Abstract

The widely used Global Historical Climatology Network (GHCN) monthly temperature dataset is available in two formats: non-homogenized and homogenized. Since 2011, the homogenized dataset has been updated almost daily by applying the “Pairwise Homogenization Algorithm” (PHA) to the non-homogenized dataset. Previous studies showed that PHA can perform well at correcting synthetic time series when certain artificial biases are introduced. However, its performance with real-world data has been less well studied. Here, the homogenized GHCN datasets (Versions 3 and 4) were downloaded almost daily over a 10-year period (2011–2021), yielding 3,689 distinct updates to the datasets. The different breakpoints identified were analyzed for a set of stations from 24 European countries for which station history metadata were available. A remarkable inconsistency in the identified breakpoints (and hence the adjustments applied) was revealed. Of the adjustments applied to GHCN Version 4, 64% (61% for Version 3) were identified on less than 25% of the runs, while only 16% of the adjustments (21% for Version 3) were identified consistently on more than 75% of the runs. The consistency of PHA adjustments improved when the breakpoints corresponded to documented station history metadata events. However, only 19% of the breakpoints (18% for Version 3) were associated with a documented event within 1 year, and 67% (69% for Version 3) were not associated with any documented event. Therefore, while PHA remains a useful tool in the community’s homogenization toolbox, many of the PHA adjustments applied to the homogenized GHCN dataset may have been spurious. Using station metadata to assess the reliability of PHA adjustments might help identify some of these spurious adjustments.

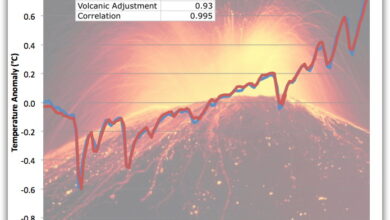

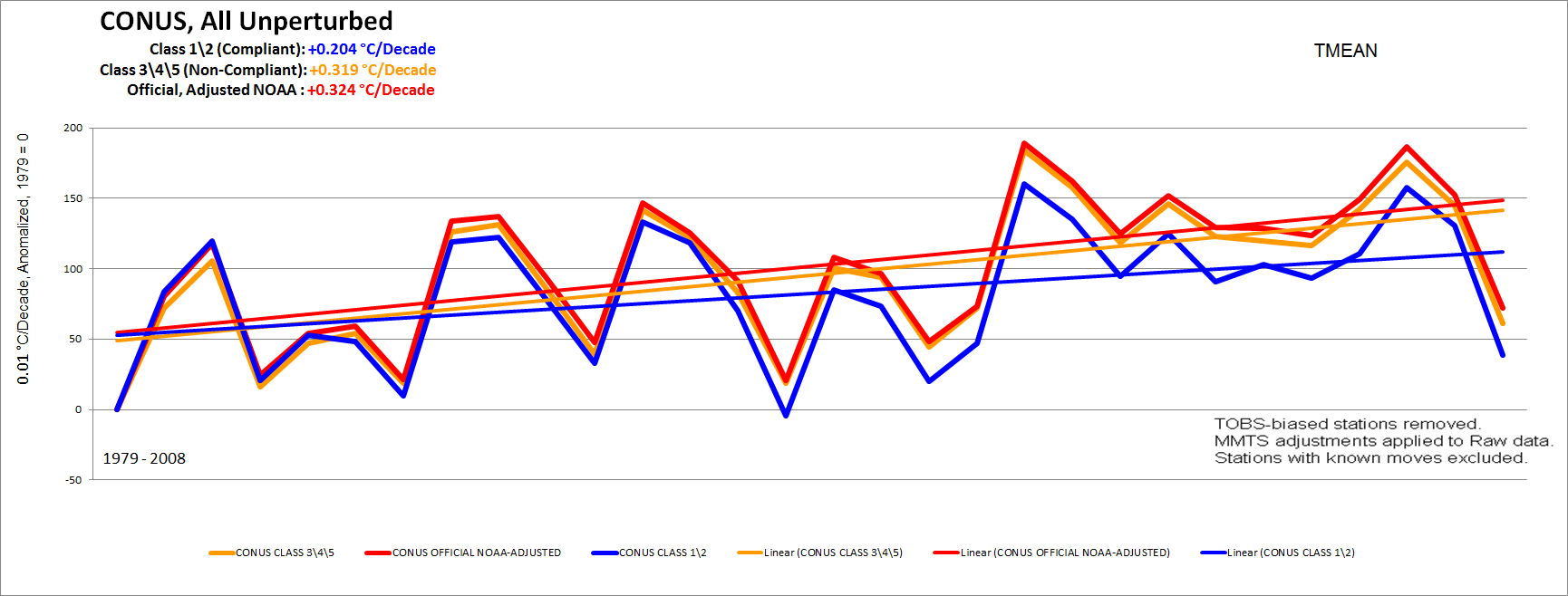

In summary, they conclude that the homogenization process introduces artificial biases into the long-term temperature record. This is what I surmised over 10 years ago with USHCN, and presented at AGU in 2015 with this graph, which shows that the homogenized end product is much warmer than the data from non-urbanized stations unaffected by nearby man-made surfaces such as asphalt, concrete, and buildings. According to my analysis, almost 90% of the entire USHCN network does not meet siting standards and thus suffers from the spurious effects of nearby heat sinks and heat sources.

Here is a passage from the new paper that relates to the graph I presented at AGU in 2015:

Consequently, the more breakpoints are adjusted in each record, the more likely the trend of that record is to converge with the trends of its neighbors. Initially, this might seem desirable, since the records become more homogeneous (supposedly one of the main goals of “homogenization”), and some have cited this convergence in support of homogenization [41]. However, if multiple neighbors are systematically affected by similar long-term non-climatic biases, the homogenized trends will tend to converge towards the mean of the station network (including its systematic component), rather than towards the actual regional climate trend.
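To make the convergence argument concrete, here is a toy sketch in Python. It is not the PHA; it simply assumes that each adjustment pass nudges a station’s trend part of the way toward the mean trend of its neighbors. The true regional trend, the shared bias, and the nudge fraction are all made-up illustrative numbers, and the neighbors are held fixed for simplicity.

```python
# Toy model of neighbor-based trend adjustment (NOT the actual PHA).
# All numbers below are hypothetical, chosen only for illustration.

TRUE_TREND = 0.10   # hypothetical true regional trend, degrees C per decade
SHARED_BIAS = 0.20  # hypothetical non-climatic bias shared by the neighbors
NUDGE = 0.5         # assumed fraction of the gap closed per adjustment pass


def adjust_toward_neighbors(station_trend, neighbor_trends, passes, nudge=NUDGE):
    """Repeatedly move a station's trend part of the way toward its neighbors' mean.

    Neighbors are held fixed here; only the target station is adjusted.
    """
    neighbor_mean = sum(neighbor_trends) / len(neighbor_trends)
    trend = station_trend
    for _ in range(passes):
        trend += nudge * (neighbor_mean - trend)
    return trend


# One well-sited station records the true trend; its four neighbors share the bias.
good_station = TRUE_TREND
neighbors = [TRUE_TREND + SHARED_BIAS] * 4

for passes in (0, 1, 3, 10):
    adjusted = adjust_toward_neighbors(good_station, neighbors, passes)
    print(f"{passes:2d} passes: adjusted trend = {adjusted:.3f}")
```

With these made-up numbers, the good station’s adjusted trend climbs toward the biased neighbor mean (about 0.30) rather than staying at the assumed true trend of 0.10, which is the effect the quoted passage describes.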

The key phrase is “multiple neighbors,” i.e., neighboring stations.

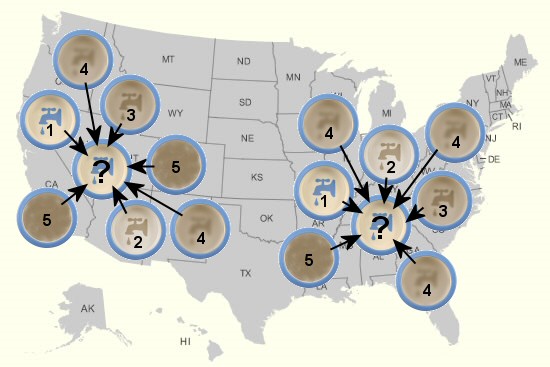

Back on August 1, 2009, I made an analogy for this issue using bowls of dirty water. If the cleanest water (a good, well-sited station) is homogenized with nearby stations whose water has varying turbidity from contaminants, on a scale where 5 is the worst, then homogenization mixes the clean water with the dirty, and you end up with a data point for the station labeled “?” that has some degree of turbidity, but not an obvious one. It’s essentially a blend of clean and dirty data, resulting in muddy water, or muddled data.

During the homogenization process, a station’s data is weighted against its neighbors within a given radius. So a station may start out as a “1” data-wise, get contaminated by the data of neighboring stations, and end up with a new weighted value of, say, “2.5”.
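The “1” becoming “2.5” arithmetic can be reproduced with a simple weighted mean. The neighbor scores (2, 3, 4) and the equal weights below are hypothetical; real homogenization does not literally work this way, and this only shows how blending pulls a clean value upward.

```python
def weighted_mean(values, weights):
    """Return the weighted average of values using the given weights."""
    if len(values) != len(weights) or not values:
        raise ValueError("values and weights must be non-empty and the same length")
    total_weight = sum(weights)
    return sum(v * w for v, w in zip(values, weights)) / total_weight


# Hypothetical turbidity scores on the 1 (clean) to 5 (worst) scale:
# the clean station itself plus three dirtier neighbors, all weighted equally.
station_and_neighbors = [1, 2, 3, 4]
equal_weights = [1, 1, 1, 1]

print(weighted_mean(station_and_neighbors, equal_weights))  # 2.5
```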

In the map below, if you apply a simple smoothing method that weights stations by their proximity to the stations marked with a question mark, what would you expect their (turbidity) values to be? And how close would the values for the east coast station and the west coast station in question be? Each value will end up close to the smoothed mean of its neighboring stations.
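One way to sketch the proximity weighting in the map exercise is inverse-distance weighting, where nearer stations count more. This is a stand-in assumed for illustration, not the method NOAA uses; the coordinates, turbidity scores, and the `power` parameter are all hypothetical.

```python
import math


def idw_estimate(target, stations, power=2.0):
    """Inverse-distance-weighted estimate at `target` from (x, y, value) stations.

    Weights are 1 / distance**power, so closer stations dominate the estimate.
    """
    num = 0.0
    den = 0.0
    for x, y, value in stations:
        d = math.hypot(x - target[0], y - target[1])
        if d == 0:
            return value  # target sits exactly on a station
        w = 1.0 / d ** power
        num += w * value
        den += w
    return num / den


# Hypothetical map grid units and turbidity scores (1 = clean, 5 = worst).
neighbors = [(0, 1, 2), (1, 0, 4), (0, -1, 5), (-3, 0, 1)]
estimate = idw_estimate((0, 0), neighbors)
print(f"Estimated turbidity at '?': {estimate:.2f}")
```

With these made-up numbers the estimate comes out around 3.57, close to the mean of the three nearby dirtier stations, because the distant clean station gets only one-ninth of their weight.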

Of course, this is not the actual method, just a visual analogy. But it is essentially what this new paper says is happening to the temperature data.

And it’s not just me and this new paper saying this; in 2012 I reported on another paper saying the same thing:

New paper blames about half of global warming on weather station data homogenization

The authors, Steirou and Koutsoyiannis, found that after accounting for homogenization errors, global warming over the past century was only about half [0.42°C] of what the IPCC claims [0.7–0.8°C].

Here’s the part I really liked: in 67% of the weather stations examined, questionable adjustments were made to the raw data that resulted in:

“increased positive trends, decreased negative trends, or changed negative trends to positive,” whereas “the expected proportion would be 1/2 (50%).”
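The point of the expected 1/2 (50%) ratio is that, if adjustments were sign-neutral, warming-direction changes should be about as common as cooling-direction ones. A quick way to see how unlikely 67% would be under that assumption is an exact one-sided binomial tail. The station count below is a hypothetical placeholder, not the number used by Steirou and Koutsoyiannis.

```python
from math import comb


def binomial_upper_tail(k, n, p=0.5):
    """P(X >= k) for X ~ Binomial(n, p), computed exactly."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


n_stations = 100                      # hypothetical sample size
k_warming = round(0.67 * n_stations)  # 67% of stations adjusted in the warming direction
p_value = binomial_upper_tail(k_warming, n_stations)
print(f"P(at least {k_warming} of {n_stations} | p = 0.5) = {p_value:.5f}")
```

The result depends strongly on the sample size, so it would need to be recomputed with the actual number of stations in the study.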

And…

“The homogenization practices used until today are mainly statistical, not well justified by experiments, and are rarely supported by metadata. It can be argued that they often lead to false results: natural features of hydroclimatic time series are regarded as errors and are adjusted.”

So from my point of view, it’s clear that homogenization is adding pseudo-climate warming where there is really no climate signal. Instead, it is taking the urbanization effect, which warms average temperatures, and adding it to the climate signal.

Steve McIntyre concurs, writing:

Finally, when reference information from neighboring stations is used, artifacts at neighboring stations tend to cause adjustment errors: the “bad neighbor” problem. In this case, after adjustment, the climate signals at neighboring stations become more similar even when the average bias over the whole network is not reduced.
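The “bad neighbor” effect in the quote can be sketched as a network in which every station is repeatedly averaged with its neighbors. This is a toy model assumed for illustration only: stations sit on a ring, each pass replaces a station’s bias with the mean of itself and its two neighbors, and the bias values are made up. Because the averaging weights are symmetric, the network-average bias stays the same while the station-to-station spread shrinks.

```python
from statistics import mean, pstdev


def smooth_ring(biases):
    """One pass: replace each station's bias by the mean of itself and its two ring neighbors."""
    n = len(biases)
    # biases[i - 1] wraps to the last station when i == 0 (Python negative indexing)
    return [(biases[i - 1] + biases[i] + biases[(i + 1) % n]) / 3 for i in range(n)]


# Hypothetical non-climatic biases (degrees C) at eight stations on a ring.
# Two stations are clean (0.0); the rest carry warm biases of varying size.
biases = [0.0, 0.6, 0.4, 0.8, 0.0, 0.5, 0.7, 0.2]

for step in range(4):
    print(f"pass {step}: mean bias = {mean(biases):.3f}, spread = {pstdev(biases):.3f}")
    biases = smooth_ring(biases)
```

The mean bias stays at 0.4 °C on every pass; with enough passes, every station, including the two clean ones, converges on that network-average bias.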

So I want to design an experiment that simulates and illustrates the “bad neighbor” problem with weather stations, and make a video about it.

I am thinking of the following:

- Use the turbidity analogy in some form, perhaps with red and blue food coloring instead of a suspended particulate, which would settle out. This is purely for visualization.

- Use actual temperatures, by creating temperature-controlled water bottles held at different temperatures.

- Mix the contents in a vial and measure the resulting turbidity/color change and the temperature of the mixture.
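For the temperature part of the experiment, the mixture should behave like a weighted average. Assuming every container holds water (same specific heat) and no heat is lost to the vial or the room, the final temperature is the mass-weighted mean of the starting temperatures. The masses and temperatures below are hypothetical.

```python
def mixed_temperature(samples):
    """Equilibrium temperature of mixed water samples given (mass_g, temp_C) pairs.

    Assumes identical specific heat for every sample and no heat exchange
    with the container or surroundings, so heat lost equals heat gained.
    """
    total_mass = sum(m for m, _ in samples)
    return sum(m * t for m, t in samples) / total_mass


# Hypothetical pour: 20 g from a "clean" 20 C bottle and 10 g each from three warmer bottles.
pour = [(20, 20.0), (10, 26.0), (10, 28.0), (10, 30.0)]
print(f"Mixed temperature: {mixed_temperature(pour):.1f} C")  # Mixed temperature: 24.8 C
```

In practice, heat exchange with the vial and the air will pull the measured value toward room temperature, so measuring quickly or insulating the vial may help the readings match the calculation.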

The trick is how to make individual temperature-controlled water bottles and hold each one at its temperature. Some lab equipment, some pipes, and some pumps will be needed.
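One common way to hold each bottle near a setpoint is simple on/off (bang-bang) control with a hysteresis band. The sketch below only simulates that logic; the heating rate, the heat-loss coefficient, the 0.5 °C band, and the idea of one heater per bottle are all assumptions, and real hardware would need its own sensor and relay code.

```python
def simulate_thermostat(setpoint, band, start_temp, ambient, steps,
                        heat_rate=1.0, loss_coeff=0.05):
    """Simulate on/off heater control with hysteresis for one water bottle.

    The heater switches on below (setpoint - band) and off above (setpoint + band).
    Each step the bottle gains heat_rate degrees if the heater is on and loses
    loss_coeff * (temp - ambient) degrees to the room (a crude Newton-cooling model).
    """
    temp = start_temp
    heater_on = False
    history = []
    for _ in range(steps):
        if temp < setpoint - band:
            heater_on = True
        elif temp > setpoint + band:
            heater_on = False
        temp += (heat_rate if heater_on else 0.0) - loss_coeff * (temp - ambient)
        history.append(temp)
    return history


# Hypothetical bottle: hold 30 C within +/- 0.5 C in a 20 C room.
trace = simulate_thermostat(setpoint=30.0, band=0.5, start_temp=20.0, ambient=20.0, steps=400)
settled = trace[200:]
print(f"after settling: min {min(settled):.2f} C, max {max(settled):.2f} C")
```

The hysteresis band keeps the heater from chattering on and off around the setpoint; a PID controller could hold the temperature more tightly, at the cost of more tuning.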

Again, purely for visual effect, I could create a map of the US or the world, place the jars on it, and use it to visualize and measure the results.

I welcome a discussion of ideas on how to do this correctly and convincingly.